Introducing a new tool into your life is really hard. Whether it’s switching to a different budgeting app or learning how to use an enterprise SaaS tool, there’s a certain amount of frustration that comes with figuring out how to navigate a completely new UI. Of course, better design helps, but it can only go so far: even the world’s best designers will struggle to explain every feature of a complex product to a new user or make a prolonged multi-step process entirely pain-free.

What if complex user interfaces could be simplified with AI agents?

Ramp has taken a stab at this idea with an agentic AI experiment. If you’re not familiar, Ramp is a Series D fintech company that helps businesses automate their finance operations: it offers corporate cards with detailed spending controls, automates expense management, and lets users book corporate travel. In May, Ramp shared a preview of Tour Guide, an AI agent that shows users how to do things in the product. Ramp is cleanly designed, but as with any software, learning to navigate the platform can be a daunting task. Tour Guide is meant to ease that process.

We believe that Ramp’s Tour Guide is one of the first examples of what we call a UI assistant — an AI agent customized for and an expert in a specific product’s interface. In this post, we’ll break down the Tour Guide, take you through our conversation with one of the key engineers behind it, and discuss what we think is the future of these agentic UI assistants.

Ramp’s Tour Guide

To learn more about Tour Guide, we sat down with Alex Shevchenko from Ramp’s Applied AI team. Alex began by highlighting that its primary use was to “onboard new users.” There’s a long tail of possible actions in Ramp, and customer success managers (CSMs) can only convey so much through synchronous meetings during the setup and sales process. Tour Guide gives new users instant access to a product expert that onboards them and guides them through actions within Ramp.

To build Tour Guide, Ramp started with SeeAct, a generalist web agent developed by researchers at Ohio State University (OSU). SeeAct helps users complete objectives by generating a candidate action, grounding it to a specific HTML element, and then performing the action. For example, given the goal of booking a United flight starting from the Google homepage, SeeAct would first decide that it should type “United” into the search bar, then identify the exact HTML element corresponding to the search bar, and finally type “United” into it.
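To make the generate, ground, act loop concrete, here is a minimal Python sketch of the Google-to-United example. It is not SeeAct’s code or API: the LLM calls SeeAct relies on are replaced by hypothetical rule-based stubs (`propose_action` returns a fixed plan, `ground` matches by word overlap), and the page is a hand-written list of elements rather than real HTML.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Element:
    tag: str         # e.g. "input", "button"
    label: str       # visible text or aria-label used for grounding
    value: str = ""  # text currently typed into the element


@dataclass
class Action:
    op: str          # "TYPE" or "CLICK"
    target: str      # natural-language description of the element to act on
    text: str = ""   # text to type, for TYPE actions


def propose_action(goal: str, page: list[Element], history: list[Action]) -> Action | None:
    """Hypothetical stand-in for the LLM planning step: a fixed two-step plan."""
    if not history:
        return Action("TYPE", "search bar", "United")
    if len(history) == 1:
        return Action("CLICK", "search button")
    return None  # plan exhausted; treat the goal as reached


def ground(action: Action, page: list[Element]) -> Element:
    """Map the action's description to the element whose label shares the most words."""
    wanted = set(action.target.lower().split())
    return max(page, key=lambda el: len(wanted & set(el.label.lower().split())))


def execute(action: Action, element: Element, log: list[str]) -> None:
    """Simulate performing the action and record what happened."""
    if action.op == "TYPE":
        element.value = action.text
        log.append(f"typed {action.text!r} into <{element.tag}> {element.label!r}")
    elif action.op == "CLICK":
        log.append(f"clicked <{element.tag}> {element.label!r}")


def run(goal: str, page: list[Element], max_steps: int = 10) -> list[str]:
    """Generate an action, ground it to an element, perform it; repeat until done."""
    history: list[Action] = []
    log: list[str] = []
    for _ in range(max_steps):
        action = propose_action(goal, page, history)
        if action is None:
            break
        execute(action, ground(action, page), log)
        history.append(action)
    return log


page = [Element("input", "Search bar"), Element("button", "Google Search button"), Element("a", "Gmail")]
for line in run("Book a United flight", page):
    print(line)
```

Separating grounding from planning is the point of the design: the planner only has to describe *what* to do in words, and a second step resolves that description to one concrete element on the page.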

From there, Ramp incrementally “overfit” the agent to the Ramp website, both by improving the agent itself (ex: modifying the HTML parser to reduce the set of possible actions) and by optimizing the underlying Ramp code (ex: updating the frontend component library to include custom ARIA attributes for the agent).
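One way to picture the parser change is a filter that keeps only interactive elements carrying an agent-facing label, so the model chooses among a handful of candidates instead of every node on the page. The sketch below assumes those labels live in the standard `aria-label` attribute; the source doesn’t say which attributes Ramp actually added, and `extract_candidates` is a hypothetical helper built on Python’s standard `html.parser`.

```python
from __future__ import annotations

from html.parser import HTMLParser

# Tags an agent could plausibly act on; everything else is ignored.
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class CandidateExtractor(HTMLParser):
    """Collect interactive elements that carry a non-empty aria-label."""

    def __init__(self) -> None:
        super().__init__()
        self.candidates: list[dict[str, str]] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; valueless attributes map to None.
        attr_map = {name: value or "" for name, value in attrs}
        label = attr_map.get("aria-label", "").strip()
        if tag in INTERACTIVE_TAGS and label:
            self.candidates.append({"tag": tag, "label": label})


def extract_candidates(html: str) -> list[dict[str, str]]:
    """Hypothetical helper: reduce a page to the labeled elements an agent may act on."""
    parser = CandidateExtractor()
    parser.feed(html)
    parser.close()
    return parser.candidates


html = """
<div class="layout">
  <nav><a href="/cards" aria-label="Go to Cards">Cards</a></nav>
  <p>Welcome back!</p>
  <button aria-label="Issue new card">+</button>
  <button>?</button>
  <input type="text" aria-label="Card nickname" />
</div>
"""
print(extract_candidates(html))
```

Here the unlabeled `?` button and all non-interactive markup are dropped, which illustrates why labeling components in the shared library and pruning the parser’s output work together.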

The end result is a customized web agent that 1) has a strong understanding of the components that live on Ramp’s website, and 2) can show a Ramp user how to carry out their intended task by autonomously executing page switches, button clicks, and text submissions.

Feature or future?*

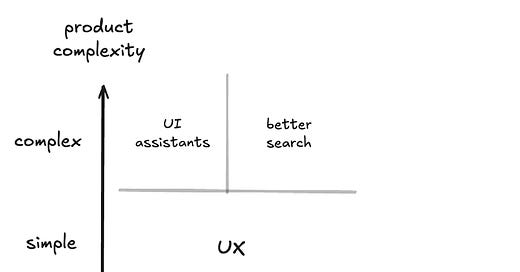

When thinking about how users learn new products, we see two main axes: 1) product complexity and 2) user familiarity. Today, if a product is simple, you rely on the UX to guide you through it. If a product is complex, onboarding is painful: you read docs and watch videos or, if you’re an important B2B buyer, get a CSM to walk you through it.

But how does AI change things?

While interactions with simple apps may stay the same, there’s much more room to craft better experiences in complex apps. Rather than relying on docs and guides, companies can now build customized UI assistants that proactively and automatically guide users toward their goals, showing new users the ropes of the product. From a business perspective, these assistants will help companies cut the costs of onboarding and internal knowledge sharing.

What else?

Thinking bigger, one of the nice things about Slack, Notion, and Arc, among others, is the universal command line: with a single shortcut (Cmd + P, Cmd + K, or Cmd + T), you can jump directly to whatever page you want. We think that experts trying to complete tasks they’ve never done before could rely on a simple semantic search over the possible actions on a website. Beyond letting users skip the nested menus that products force us to click through, this could be extended into a helpful assistant; imagine typing “follow up with Jane” and instantly having your most recent email with your coworker pulled up.
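A semantic search over actions could be as simple as ranking a catalog of action descriptions by similarity to the query. In the sketch below, bag-of-words cosine similarity stands in for the embedding model a real implementation would more likely use, and the action catalog and its routes are hypothetical, not any product’s actual URLs.

```python
from __future__ import annotations

import math
import re
from collections import Counter

# Hypothetical catalog of in-product actions mapped to the routes that perform them.
ACTIONS = {
    "Issue a new virtual card": "/cards/new",
    "Submit an expense receipt": "/expenses/upload",
    "Book a business trip": "/travel/book",
    "Set a spending limit on a card": "/cards/limits",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, actions: dict[str, str], k: int = 1) -> list[tuple[str, str]]:
    """Return the k (description, route) pairs most similar to the query."""
    q = vectorize(query)
    ranked = sorted(actions.items(), key=lambda item: cosine(q, vectorize(item[0])), reverse=True)
    return ranked[:k]


print(search("limit card spending", ACTIONS))
```

Swapping `vectorize` for real embeddings would let a query like “cap what my team can spend” match the same action without sharing any words with it; the ranking logic would stay the same.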

But what about automation?

Coming back to UI assistants, Alex told us that automating repetitive tasks was an anti-goal for Ramp’s Tour Guide and that “if something is tedious, the UX should be changed.” This sets Ramp’s work apart from the more common use of web agents for automation, like what Adept aimed to do.

We’re more optimistic about agents completing tasks autonomously. While we agree that some product friction should be fixed through UX, we think there is a class of tedious or repetitive tasks where UI assistants could be disproportionately valuable for users (ex: customer-specific goals where dedicating eng time wouldn’t be worth it).

In fact, we think UI assistants could eventually become something closer to an AI coworker. For instance, imagine you want to delete every branch in a GitHub repo that has been stale for more than six months. Previously, this would have required either significant manual effort in the product or use of the API; now, you could just ask a UI assistant to do it for you while you keep working. Particularly for tasks that require some “intelligent” decision-making, UI assistants could be well suited to replacing manual or engineering labor.
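The core decision in the stale-branch example can be sketched as a filter over branch metadata. This assumes the branch list (name, last-commit timestamp, protection status) has already been fetched; the calls to list and delete branches are left out, the `Branch` type is hypothetical, and six months is approximated as 182 days.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

SIX_MONTHS = timedelta(days=182)  # approximation of "six months"


@dataclass
class Branch:
    name: str
    last_commit: datetime    # timezone-aware timestamp of the newest commit
    protected: bool = False  # e.g. the default branch; never a deletion candidate


def stale_branches(branches: list[Branch], now: datetime, max_age: timedelta = SIX_MONTHS) -> list[str]:
    """Names of unprotected branches whose last commit is older than max_age."""
    cutoff = now - max_age
    return [b.name for b in branches if not b.protected and b.last_commit < cutoff]


now = datetime(2024, 7, 1, tzinfo=timezone.utc)
branches = [
    Branch("main", datetime(2023, 1, 1, tzinfo=timezone.utc), protected=True),
    Branch("old-experiment", datetime(2023, 11, 15, tzinfo=timezone.utc)),
    Branch("feature-x", datetime(2024, 6, 20, tzinfo=timezone.utc)),
]
print(stale_branches(branches, now))
```

The filter itself is mechanical; the paragraph’s argument is that an assistant could also supply the fetching, the deletion, and the judgment calls a fixed rule like this one ignores.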

And what if we took it one step further? As we grow more accustomed to agents, the product itself might be abstracted away. Someday, we may just talk to a Slack bot that takes actions in the product, relays responses and screenshots, and then awaits our next command.

* Observant readers will notice that we previously called ourselves “Future or Friction” and have now switched to “Feature or Future.” We’re toying around with some new names and think this one better captures the essence of what we write about, but do let us know what you think :)

While at Envoy and OfficeTogether, we spent so much time on UX, but as the features grew, it was impossible to keep things simple and delightful, so we had to throw CSMs and human labor at the problem. We just have to make sure the UI assistant doesn't feel like the next Clippy!

We are currently building this as a product, and we have the same discussion internally all the time: what will the agentification of SaaS look like?

I think there are multiple ways this could turn out:

1) Full agentification: we just talk to our computer, and it shows us interfaces as we need them.

2) Partial agentification: mundane, low-frequency tasks will be done by an agent, while more creative tasks will be done in a UI.

3) No agentification: we will keep using SaaS as we know it today.

Personally, I think the third option is unlikely.

I also think that tools meant for creating (Miro, Notion, Figma, etc.) will stay very similar to what we know today, because their interfaces are a very efficient way to communicate what you want.

Our thesis is that an AI agent that understands a product well can first explain things to users and then provide a path toward full agentification in the future.